As you may have gathered from the encoding name, the ISO Latin-1 standard allowed for the representation of many of the Latin-derived languages used on the European continent. Just because the standard was created, however, didn't mean it was usable. At the time, a lot of computers had already started using the other 128 "characters" that can be represented by an 8-bit character to some advantage. The two surviving examples of the use of these extra characters are the IBM Personal Computer (PC) and the most popular computer terminal ever, the Digital Equipment Corporation VT-100. The latter lives on in the form of terminal emulator software. The actual time of death for the 8-bit character will no doubt be debated for decades, but I peg it at the introduction of the Macintosh computer in 1984.

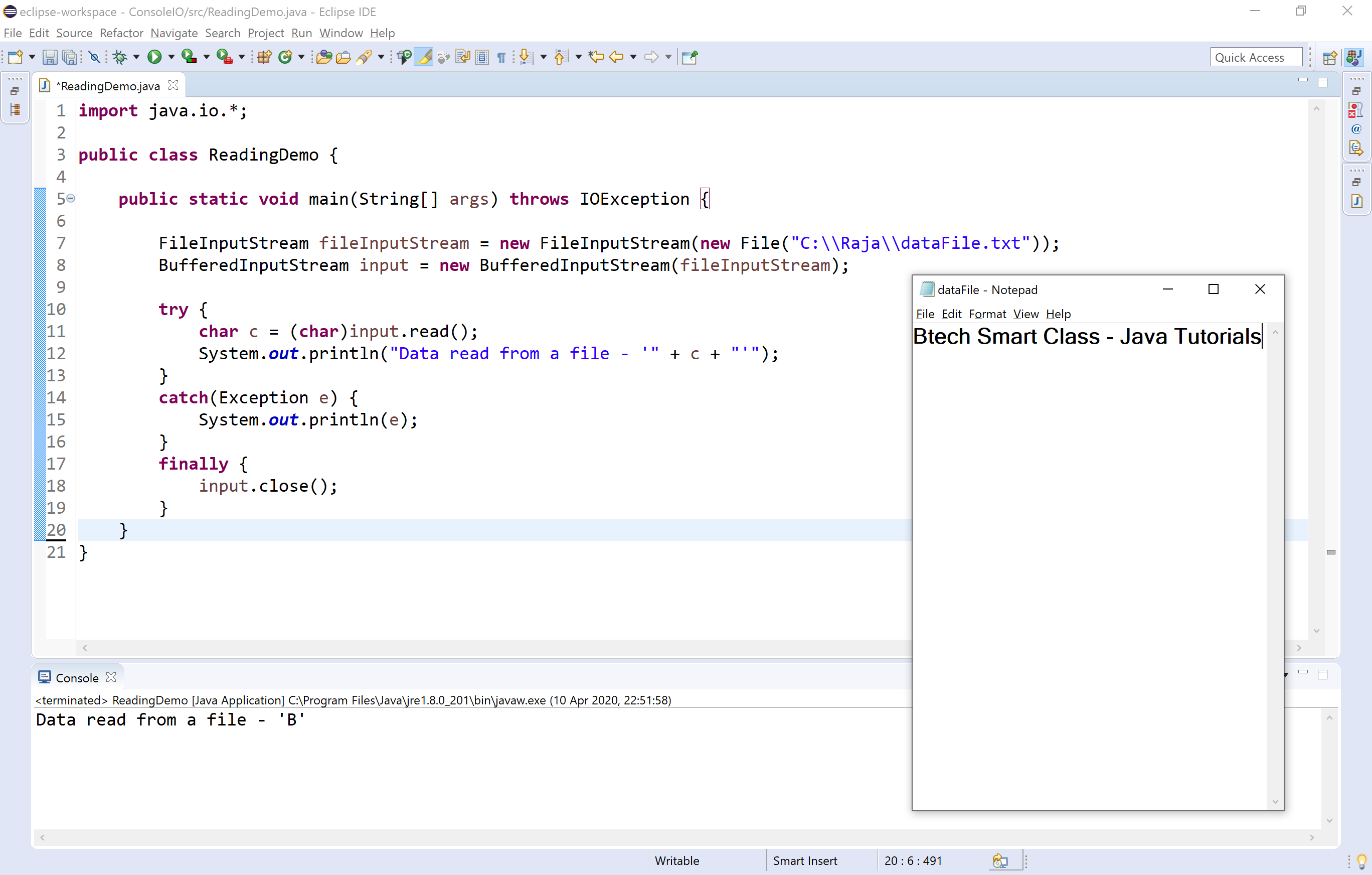

#Cool reader java code#

The number of available characters doubled when the venerable 7-bit ASCII code was incorporated into an 8-bit character encoding called ISO Latin-1 (or ISO 8859-1, "ISO" being the International Organization for Standardization).
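To make the "doubling" concrete, here is a minimal sketch, not taken from the original column, that uses the modern JDK's `StandardCharsets` to show that a 7-bit ASCII character keeps the same value in ISO Latin-1, while an accented letter needs the eighth bit. The class name `AsciiLatin1Sketch` and its two helper methods are hypothetical names chosen for illustration.

```java
import java.nio.charset.StandardCharsets;

class AsciiLatin1Sketch {

    // Hypothetical helper: the single byte ISO Latin-1 assigns to c, as 0..255.
    // Only meaningful for characters in the Latin-1 range (U+0000 to U+00FF).
    static int latin1Byte(char c) {
        return String.valueOf(c).getBytes(StandardCharsets.ISO_8859_1)[0] & 0xFF;
    }

    // Hypothetical helper: true if c can be represented in 7-bit US-ASCII.
    static boolean fitsInAscii(char c) {
        return StandardCharsets.US_ASCII.newEncoder().canEncode(c);
    }

    public static void main(String[] args) {
        System.out.println(latin1Byte('A'));       // 65: same value as in ASCII
        System.out.println(fitsInAscii('A'));      // true
        System.out.println(latin1Byte('\u00e9'));  // 233: e with acute accent, above 127
        System.out.println(fitsInAscii('\u00e9')); // false
    }
}
```

The `& 0xFF` mask is needed because Java's `byte` type is signed, so values above 127 would otherwise print as negative numbers.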

I knew I was in trouble when I found myself asking the question, "So what is a character?" Well, a character is a letter, right? A bunch of letters make up a word, words form sentences, and so on. The reality, however, is that the relationship between the representation of a character on a computer screen, called its glyph, and the numerical value that specifies that glyph, called a code point, is not really straightforward at all.

I consider myself lucky to be a native speaker of the English language: first, because it was the common language of a significant number of those who contributed to the design and development of the modern-day digital computer; second, because it has a relatively small number of glyphs. There are 95 printable characters in the ASCII definition (counting the space) that can be used to write English. Compare this to Chinese, where there are over 20,000 glyphs defined and that definition is incomplete. From early beginnings in Morse and Baudot code, the overall simplicity (few glyphs, statistical frequency of appearance) of the English language has made it the lingua franca of the digital age. But as the number of people entering the digital age has increased, so has the number of non-native English speakers. As the numbers grew, more and more people were disinclined to accept that computers used ASCII and spoke only English. This greatly increased the number of "characters" computers needed to understand. As a result, the number of glyphs encoded by computers had to double.

The 1.1 version of Java introduces a number of classes for dealing with characters. These new classes create an abstraction for converting from a platform-specific notion of character values into Unicode values. This column looks at what has been added, and the motivations for adding these character classes.

Perhaps the most abused base type in the C language is the type char. The char type is abused in part because it is defined to be 8 bits, and for the last 25 years, 8 bits has also defined the smallest indivisible chunk of memory on computers. When you combine the latter fact with the fact that the ASCII character set was defined to fit in 7 bits, the char type makes a very convenient "universal" type. Further, in C, a pointer to a variable of type char became the universal pointer type because anything that could be referenced as a char could also be referenced as any other type through the use of casting.

The use and abuse of the char type in the C language led to many incompatibilities between compiler implementations, so in the ANSI standard for C, two specific changes were made: the universal pointer was redefined to have a type of void, thus requiring an explicit declaration by the programmer; and the signedness of characters was pinned down (explicit signed char and unsigned char types were added, while plain char was left to the implementation to treat as one or the other), thus defining how they would be treated when used in numeric computations. Then, in the mid-1980s, engineers and users figured out that 8 bits was insufficient to represent all of the characters in the world. Unfortunately, by that time, C was so entrenched that people were unwilling, perhaps even unable, to change the definition of the char type.

Now flash forward to the '90s, to the early beginnings of Java. One of the many principles laid down in the design of the Java language was that characters would be 16 bits. This choice supports the use of Unicode, a standard way of representing many different kinds of characters in many different languages. Unfortunately, it also set the stage for a variety of problems that are only now being rectified.
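As a rough illustration of the conversion the character classes perform, the sketch below reads ISO Latin-1 bytes through an `InputStreamReader` and ends up with Java's 16-bit Unicode chars. It is not code from the column: the class name `LatinOneReaderSketch` and the helper `decodeLatin1` are hypothetical, and it assumes a modern JDK (try-with-resources, `StandardCharsets`) rather than the 1.1 release discussed in the text.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

class LatinOneReaderSketch {

    // Hypothetical helper: turns ISO Latin-1 bytes into Java's 16-bit chars.
    static String decodeLatin1(byte[] raw) {
        StringBuilder out = new StringBuilder();
        try (Reader reader = new InputStreamReader(
                new ByteArrayInputStream(raw), StandardCharsets.ISO_8859_1)) {
            int c;
            // read() returns one decoded character at a time, or -1 at end of input.
            while ((c = reader.read()) != -1) {
                out.append((char) c);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // Four bytes: 'c', 'a', 'f', and 0xE9 (e with acute accent in ISO Latin-1).
        byte[] raw = { 0x63, 0x61, 0x66, (byte) 0xE9 };
        String text = decodeLatin1(raw);
        System.out.println(text.length());        // 4: one char per input byte
        System.out.println((int) text.charAt(3)); // 233, i.e. U+00E9
        System.out.println(Character.SIZE);       // 16: bits in a Java char
    }
}
```

The point of the design is that the byte-oriented stream stays platform-specific while everything on the `Reader` side is Unicode, so the encoding is named in exactly one place.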
